Proactive harm detection for real-time conversations

The only proactive voice moderation solution that works in real time

From online games and social media platforms to delivery apps and contact centers, our voice is what connects us. But phone calls and voice chats can devolve, putting user wellbeing and engagement at risk.

Built on advanced machine learning technology and designed with player safety and privacy in mind, ToxMod triages voice chat to flag bad behavior, analyzes the nuances of each conversation to determine toxicity, and supplies relevant, accurate context so that moderators, customer success managers, and trust & safety teams can respond quickly to each incident.

VOICE-NATIVE

ToxMod was built to understand the full nuance of voice. It goes beyond transcription to consider emotion, speech acts, listener responses, and much more.

INTELLIGENT

ToxMod becomes an expert in your game's code of conduct and escalates what matters most to your team with high priority.

SECURE

All user data is anonymized and protected in accordance with the ISO 27001 standard. Modulate will never sell or rent your data.

PLUG-AND-PLAY

ToxMod ships with a variety of plugins for different combinations of game engine and voice infrastructure, so you can integrate it in less than a day.

FLEXIBLE

ToxMod provides the reports. Your Trust & Safety team decides which action to take for each detected harm.

DETAILED

Review an annotated back-and-forth between all participants to understand what drew ToxMod’s attention and who instigated things.

Proactively detect when a conversation devolves

Don't wait for your users to report a negative experience they've had in your ecosystem. ToxMod is the only proven real-time voice moderation solution available today that enables platforms to respond proactively to toxic behavior as it happens, preventing harm from escalating.

41%

of Americans have personally experienced some form of online harassment, including offensive name-calling, purposeful embarrassment, stalking, physical threats, harassment over a sustained time, or sexual harassment (Pew Research).

67%

of multiplayer gamers say they would likely stop playing a multiplayer game if another player were exhibiting toxic behavior (Take This).

How ToxMod works

First, ToxMod triages voice chat data to determine which conversations warrant investigation and analysis.

- Triage is crucial to ToxMod's efficiency and accuracy: it flags the most pertinent conversations for toxicity analysis and filters out silence and unrelated background noise.

- Unlike text, voice data is labor-intensive and often cost-prohibitive to process, so accurate and reliable filtering is essential.

Second, ToxMod analyzes the tone, context, and perceived intention of those filtered conversations using its advanced machine learning models.

- ToxMod’s powerful toxicity analysis assesses the tone, timbre, emotion, and context of a conversation to determine the type and severity of toxic behavior.

- ToxMod is the only voice moderation tool built on advanced machine learning models that go beyond keyword matching to provide true understanding of each instance of toxicity.

- ToxMod's machine learning technology picks up on emotional cues and nuance to help differentiate between friendly banter and genuine bad behavior.

Third, ToxMod escalates the voice chats deemed most toxic and empowers moderators to act efficiently to mitigate bad behavior and build healthier communities.

- ToxMod’s web console provides actionable, easy-to-understand information and context for each instance of toxic behavior.

- Moderators and community teams can work more efficiently, allowing even small teams to manage and monitor millions of simultaneous chats.

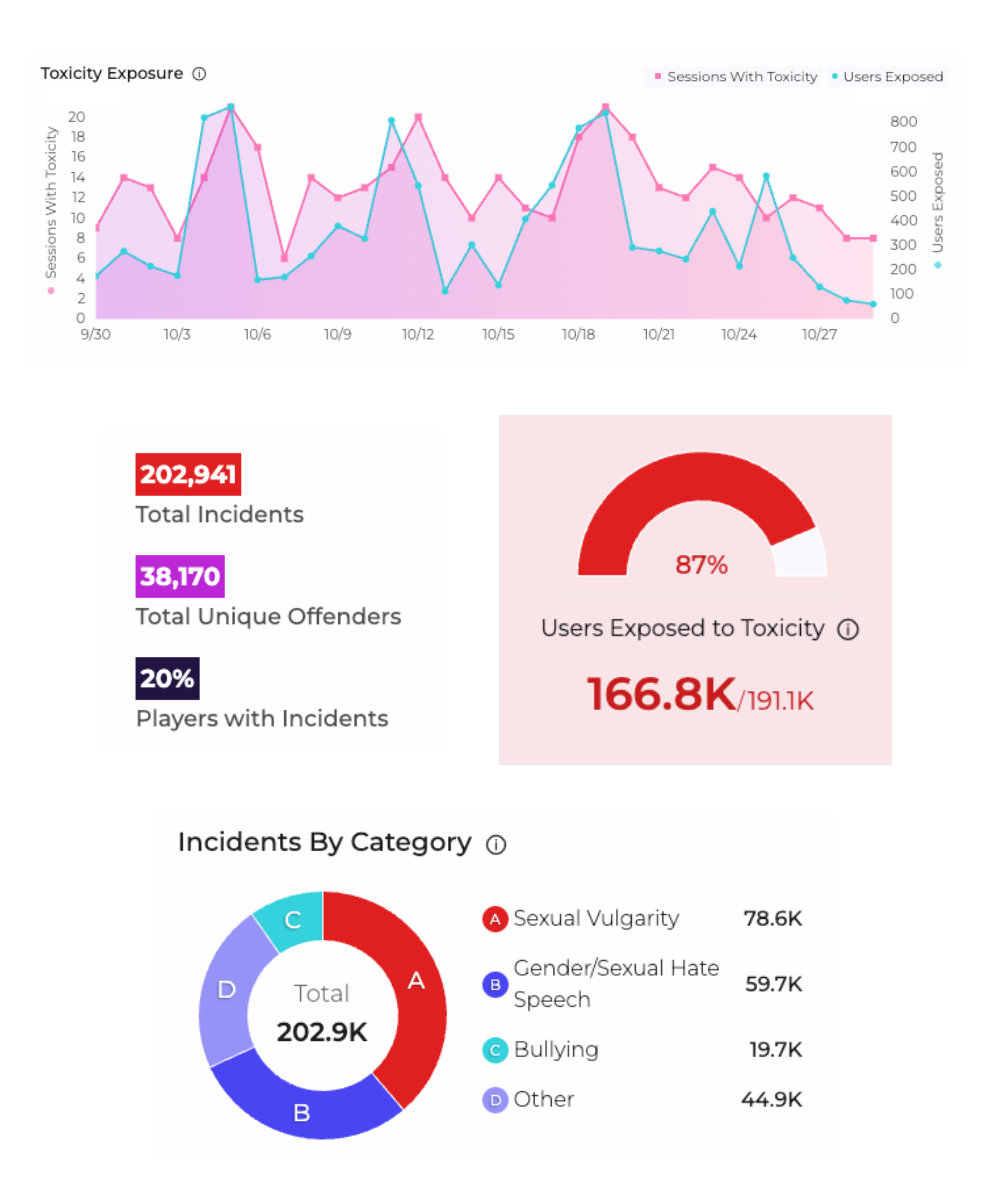

Ready for enterprise

ToxMod provides best-in-class community health and protection services for AAA studios and indies alike.

Enterprise-Grade Support

Modulate's support team goes above and beyond the call of duty for our customers. Our technical teams are available 24/7 to help address critical issues in real time.

Available in 18 Languages & Counting

ToxMod can identify genuine harm in multiple languages and even keep track of context in mixed-language conversations.

Built-In Compliance Readiness

Modulate's team supports customers in writing clear Codes of Conduct, producing regular Transparency Reports, and conducting periodic Risk Assessments.

Book a Demo

Learn how ToxMod can help you protect your community and empower your community managers and moderation teams.
